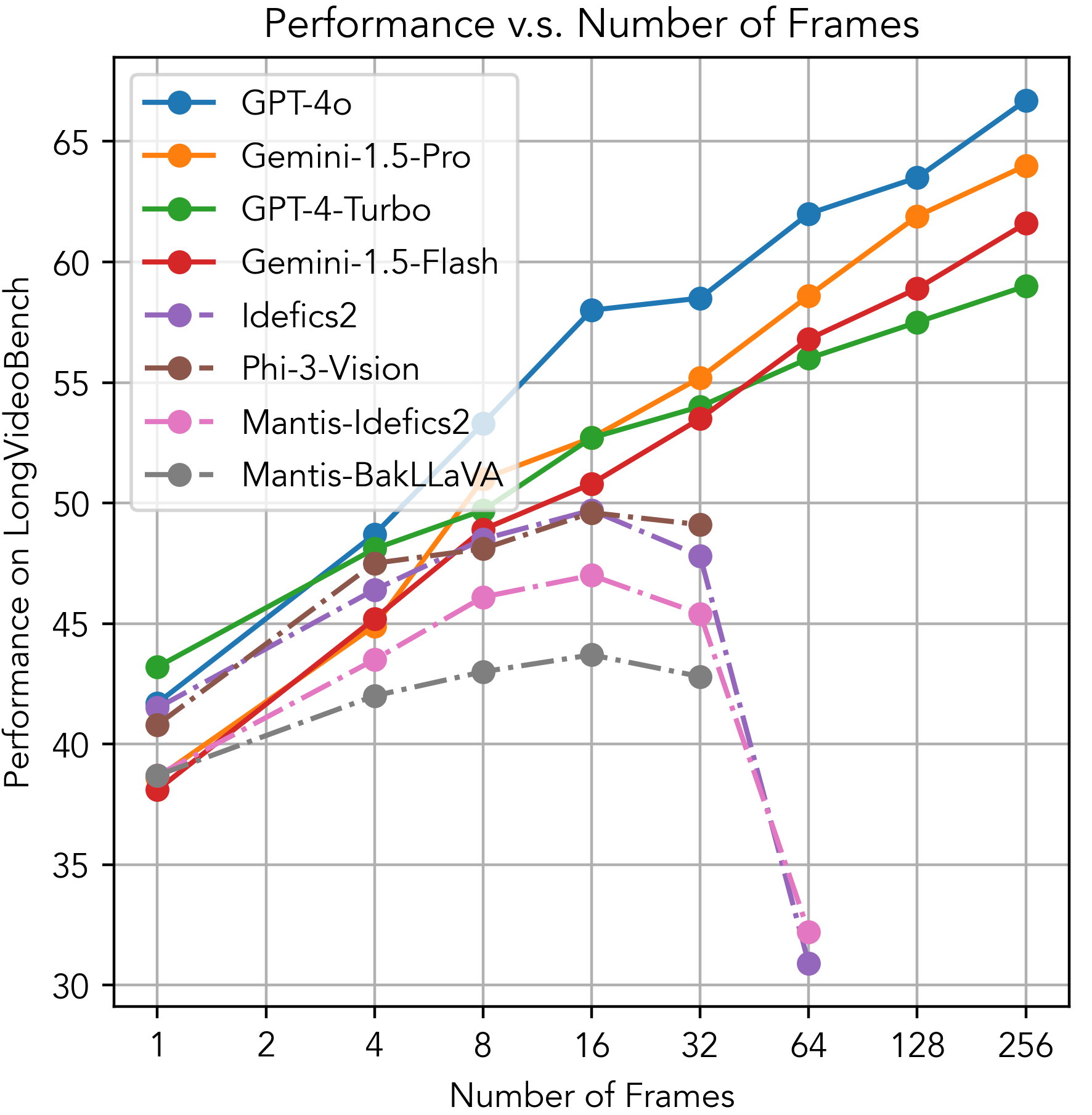

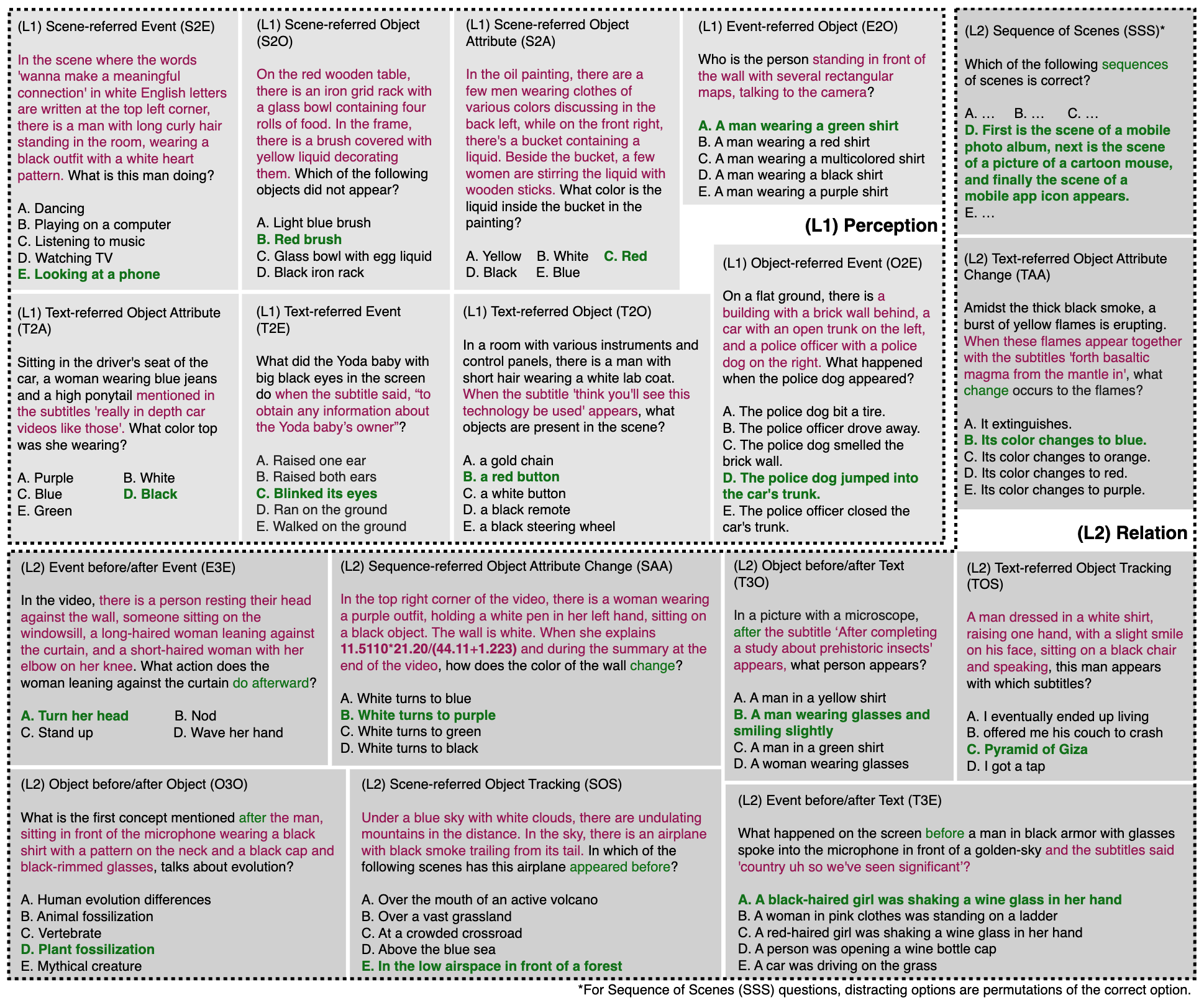

LongVideoBench

A Benchmark for Long-context Interleaved Video-Language Understanding